Savonia Article Pro: Object Detection Metrics: Flood vs Water Detection Use Case

Savonia Article Pro is a collection of multidisciplinary Savonia expertise on various topics.

This work is licensed under CC BY-SA 4.0

Introduction: The Importance of Choosing the Right Metrics in Object Detection Projects

Computer vision technologies are now widely used in daily life and in industrial applications, and recent artificial intelligence models have made them easier to adopt. In object detection projects, several metrics are used to evaluate model performance. Precision, Recall, mAP (Mean Average Precision), and F1-score matter to different degrees depending on the project's requirements, so it is important to decide which metrics a project should prioritize and report.

In this article, we analyze these metrics through a flood vs. water (e.g., puddle) detection project and discuss which metrics matter most for different types of projects.

Object Detection Metrics and Their Importance

When evaluating object detection models, it is crucial to use well-defined metrics to understand their performance. Different metrics highlight various aspects of model behavior, such as accuracy, robustness, and error rates. Below are the key metrics used in object detection, particularly in flood and water detection use cases.

Precision (P)

Precision measures the ratio of correct positive predictions (true positives) to all positive predictions made by the model (true positives plus false positives). In simpler terms, it answers the question: “Out of all the objects that the model identified as flood or water, how many were actually flood or water?”

Precision=TP/(TP+FP)

where:

TP (True Positives): Correctly detected flood/water regions.

FP (False Positives): Incorrectly detected flood/water regions (false alarms).
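As a quick illustration of the formula, the sketch below computes precision from raw counts. The counts are hypothetical, not results from our experiments, and returning 0.0 when the model made no predictions at all is an assumed convention.

```python
def precision(tp: int, fp: int) -> float:
    """Share of predicted flood/water regions that were correct: TP / (TP + FP)."""
    predicted = tp + fp
    # Assumed convention: with no predictions, report 0.0 instead of dividing by zero.
    return tp / predicted if predicted else 0.0


# Hypothetical counts: the model flagged 50 regions as flood, 45 of them correctly.
print(precision(tp=45, fp=5))  # 0.9
```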

Precision is critical in applications where false alarms must be minimized, such as security systems, healthcare (cancer detection), and autonomous vehicles, where false positives can trigger unnecessary interventions. In flood detection, false alarms can lead to unnecessary evacuations, wasted resources, and reduced public trust. A high-precision model ensures that when a flood is detected, it is highly likely to be real.

Recall (R)

Recall measures the proportion of real objects correctly detected by the model. It answers the question: “Out of all the real flood or water areas, how many did the model correctly detect?”

Recall=TP/(TP+FN)

where:

TP (True Positives): Correctly detected flood/water regions.

FN (False Negatives): Actual flood/water regions that the model failed to detect.
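The recall formula can be sketched the same way. Again the counts are invented for illustration, and returning 0.0 when there are no ground-truth regions is an assumed convention (some tools leave recall undefined in that case).

```python
def recall(tp: int, fn: int) -> float:
    """Share of actual flood/water regions that the model found: TP / (TP + FN)."""
    actual = tp + fn
    # Assumed convention: with no ground-truth regions, report 0.0.
    return tp / actual if actual else 0.0


# Hypothetical counts: 60 flooded regions are annotated and the model finds 45.
print(recall(tp=45, fn=15))  # 0.75
```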

Recall is essential in applications where missed detections can have severe consequences, such as medical diagnosis, disaster response, and flood detection, where missing a real case can be dangerous. In flood detection, low recall means the model is failing to detect real flood areas, which can lead to catastrophic outcomes such as delayed emergency responses and increased damage. For this reason, recall is one of the most crucial metrics in flood detection.

F1-Score (Harmonic Mean of Precision and Recall)

The F1-score provides a balance between precision and recall, ensuring that both false positives and false negatives are considered.

F1=2*(Precision*Recall)/(Precision+Recall)

The F1-score is useful when a trade-off between precision and recall is needed. In flood detection, for example, a model should neither trigger too many false alarms (low precision) nor miss real floods (low recall). A higher F1-score indicates a better balance between the two; because it is a harmonic mean, it stays low whenever either precision or recall is low.
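The sketch below shows why the harmonic mean is used: a model with perfect precision but very poor recall still gets a low F1-score, whereas a simple average would hide the weakness. The precision and recall values are made up for illustration, and returning 0.0 when both are zero is an assumed convention.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both components are zero
    return 2 * precision * recall / (precision + recall)


# Hypothetical models, not results from this project.
balanced = f1_score(0.90, 0.75)  # roughly 0.818
lopsided = f1_score(1.00, 0.10)  # roughly 0.182, although (1.00 + 0.10) / 2 = 0.55
print(round(balanced, 3), round(lopsided, 3))  # 0.818 0.182
```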

Mean Average Precision (mAP)

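The mAP is a widely used object detection metric. For each class, average precision (AP) summarizes the precision-recall curve obtained by varying the confidence threshold; mAP is the mean of these AP values over all classes. Its variants differ in the IoU threshold used to decide whether a detection counts as correct.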
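To make "across confidence thresholds" concrete, the sketch below computes AP for one class by sweeping the confidence threshold from high to low and taking the area under the resulting precision-recall curve, then averages AP over classes to obtain mAP. It uses all-point interpolation (the style popularized by Pascal VOC); other evaluators, for example the COCO evaluation with its 101 recall points, may give slightly different numbers. It assumes every prediction has already been labelled as a true or false positive at a fixed IoU threshold, and the flood/water inputs are invented.

```python
import numpy as np


def average_precision(confidences, is_tp, n_ground_truth):
    """Area under the interpolated precision-recall curve for one class.

    confidences: score of every prediction of this class.
    is_tp: whether each prediction matched a ground-truth box at the chosen IoU.
    n_ground_truth: number of labelled objects of this class.
    """
    if n_ground_truth == 0:
        return 0.0  # assumed convention; some evaluators skip such classes instead
    # Sort by descending confidence: lowering the threshold admits one prediction at a time.
    order = np.argsort(-np.asarray(confidences, dtype=float), kind="stable")
    hits = np.asarray(is_tp, dtype=bool)[order]
    tp = np.cumsum(hits)
    fp = np.cumsum(~hits)
    recall = tp / n_ground_truth
    precision = tp / (tp + fp)
    # Pad the curve, then replace each precision by the best precision at any
    # equal or higher recall (the interpolated precision envelope).
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas at every point where recall increases.
    steps = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


def mean_average_precision(per_class):
    """mAP: unweighted mean of the per-class AP values."""
    return float(np.mean([average_precision(*c) for c in per_class]))


# Invented example: (confidences, is_tp, number of labelled objects) per class.
flood = ([0.9, 0.8, 0.7, 0.6], [True, False, True, True], 3)
water = ([0.95, 0.5], [True, False], 2)
print(round(average_precision(*flood), 3))               # 0.833
print(round(mean_average_precision([flood, water]), 3))  # 0.667
```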

Variants:

mAP@50 (IoU = 0.5): A prediction counts as correct when the Intersection over Union (IoU), the area of overlap between the predicted and ground-truth bounding boxes divided by the area of their union, is at least 0.5. This is a relatively lenient criterion, and mAP@50 is useful for general performance evaluation.

mAP@50-95 (IoU = 0.5 to 0.95): A more detailed evaluation that averages mAP over ten IoU thresholds (0.50, 0.55, …, 0.95). It is stricter and more realistic because it rewards models that localize objects precisely, which makes it valuable for comparing models.
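The sketch below computes IoU for two axis-aligned boxes and shows how the same prediction can score perfectly at IoU 0.5 but much lower across the 0.50 to 0.95 range. The (x1, y1, x2, y2) box format and the coordinates are assumptions for illustration, and the "AP" here is deliberately the degenerate case of one prediction and one label, not a full evaluator.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2) corners."""
    # Corners of the overlapping rectangle (empty if the boxes do not touch).
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds behind mAP@50-95: 0.50, 0.55, ..., 0.95.
THRESHOLDS = [0.50 + 0.05 * i for i in range(10)]

# Hypothetical flood box: the prediction sits 20 px to the right of the label.
ground_truth = (0, 0, 100, 100)
prediction = (20, 0, 120, 100)
overlap = iou(prediction, ground_truth)

# With a single prediction and a single label, AP at a given threshold is 1.0 if
# the prediction counts as a true positive there and 0.0 otherwise.
passed = [overlap >= t for t in THRESHOLDS]
ap50 = 1.0 if passed[0] else 0.0
ap50_95 = sum(passed) / len(passed)
print(round(overlap, 3), ap50, ap50_95)  # 0.667 1.0 0.4
```

The box is "good enough" for mAP@50 but passes only four of the ten thresholds, which is why mAP@50-95 is the better indicator of localization quality.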

In flood detection, higher mAP values indicate that the model not only detects flood and water regions correctly but also localizes them accurately.

Which Metric Is More Important for Each Project?

The appropriate metric for an object detection project depends on its specific objectives. In flood detection, recall and mAP@50-95 are essential because undetected floods have severe consequences. For face detection in security cameras, precision is prioritized to minimize false alarms. In medical screening (cancer detection), recall is critical because a missed case can have serious health implications. Autonomous vehicles (traffic sign recognition) rely on mAP@50 and the F1-score for accurate and safe navigation.

In wildlife monitoring, recall ensures that rare animals are detected. Retail theft detection emphasizes precision to reduce false accusations. Defect detection in manufacturing uses mAP@50-95 to find and localize defects accurately. Weapon detection in public spaces requires high recall to address security threats effectively. In each case, the choice of metric reflects how the project balances the risks of false positives against those of false negatives.

Flood and Water Detection Using YOLOv8 – A Practical Implementation

Flood detection is crucial for early warning systems, helping to prevent damage, economic loss, and casualties. However, not all water accumulation indicates flooding. Differentiating between general water surfaces (e.g., puddles, lakes) and actual flood conditions is essential to avoid false alarms in detection systems.

Object Detection Model

YOLO (You Only Look Once) is a real-time object detection algorithm known for its speed and accuracy. In this use case, YOLOv8 was chosen for its real-time performance, efficiency, and flexibility in object detection tasks.

Dataset Collection

We used multiple datasets from the Roboflow Universe platform to ensure robust detection of flood versus non-flood water accumulation. Roboflow Universe provides annotated datasets for computer vision tasks, enabling quick adaptation without manual labeling.

For our project, we combined four datasets: two containing water images and two focused on flood images, creating a comprehensive dataset with 9467 images. Additionally, background images were added to enhance model robustness. These datasets help distinguish between general water accumulation and actual flooding, improving real-world applicability and preventing false alarms in flood detection systems.
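Merging several Roboflow exports usually means reconciling their class IDs, because each dataset numbers its own classes. The sketch below assumes the datasets were exported in the YOLO text format (one `class_id x_center y_center width height` line per object) and assumes a merged class order of 0 = flood, 1 = water; the source class lists and the mapping dictionaries are hypothetical, not taken from our project files.

```python
def remap_label_lines(lines, class_map):
    """Rewrite YOLO-format label lines to the merged dataset's class IDs.

    lines: lines of one label file, each "class_id x_center y_center width height".
    class_map: source class ID -> target class ID; objects of unmapped classes are dropped.
    """
    remapped = []
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # ignore blank lines
        source_id = int(parts[0])
        if source_id in class_map:
            # Box coordinates are normalized to the image, so only the class ID changes.
            remapped.append(" ".join([str(class_map[source_id])] + parts[1:]))
    return remapped


# Hypothetical merged class order: 0 = flood, 1 = water.
# Hypothetical sources: a flood set with classes ["building", "flood"]
# and a water set whose only class is "water".
FLOOD_SET_MAP = {1: 0}
WATER_SET_MAP = {0: 1}

print(remap_label_lines(["1 0.50 0.50 0.20 0.30", "0 0.10 0.10 0.05 0.05"], FLOOD_SET_MAP))
# ['0 0.50 0.50 0.20 0.30']
print(remap_label_lines(["0 0.40 0.60 0.30 0.20"], WATER_SET_MAP))
# ['1 0.40 0.60 0.30 0.20']
```

In the YOLO format, a background image is simply an image with an empty label file. One consequence worth checking: if all objects in a file are dropped by the mapping, that image silently becomes a background image, which is wrong if it actually shows flood or water.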

Training and Implementation

Model: YOLOv8 (anchor-free detection)

Hyperparameters: Learning rate: 1e-3, Batch size: 16, Epochs: 100, Optimizer: AdamW

Train/Valid/Test Split: 70%-20%-10%

Augmentation: Not applied in the final model
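The configuration above can be expressed as Ultralytics training arguments roughly as sketched below. The argument names (`data`, `epochs`, `batch`, `optimizer`, `lr0`, and the augmentation keys) are standard Ultralytics training settings, but the dataset file name, the model size, the exact set of augmentation keys set to zero, and the way the 70/20/10 split was produced are assumptions, not a record of our run. The Ultralytics call itself is left as a comment so the sketch runs without the package or the data.

```python
import random

# Training settings from the list above as Ultralytics training arguments.
# The dataset file name is hypothetical, and which augmentation keys to zero is an
# assumption (several of them already default to 0.0 in Ultralytics).
TRAIN_ARGS = {
    "data": "flood_water.yaml",  # hypothetical dataset config file
    "epochs": 100,
    "batch": 16,
    "optimizer": "AdamW",
    "lr0": 1e-3,
    # Colour, geometric, flip, mosaic, mixup and copy-paste augmentations switched off.
    "hsv_h": 0.0, "hsv_s": 0.0, "hsv_v": 0.0,
    "degrees": 0.0, "translate": 0.0, "scale": 0.0, "shear": 0.0,
    "perspective": 0.0, "flipud": 0.0, "fliplr": 0.0,
    "mosaic": 0.0, "mixup": 0.0, "copy_paste": 0.0,
}


def split_dataset(image_names, seed=0, train_frac=0.7, valid_frac=0.2):
    """Shuffle reproducibly and cut into train/valid/test; test gets the remainder (~10%)."""
    names = sorted(image_names)  # sort first so the split does not depend on input order
    random.Random(seed).shuffle(names)
    n_train = int(len(names) * train_frac)
    n_valid = int(len(names) * valid_frac)
    return names[:n_train], names[n_train:n_train + n_valid], names[n_train + n_valid:]


# Hypothetical file names standing in for a dataset of 9,467 images.
images = [f"img_{i:05d}.jpg" for i in range(9467)]
train_set, valid_set, test_set = split_dataset(images)
print(len(train_set), len(valid_set), len(test_set))  # 6626 1893 948

# With the ultralytics package and the data in place, training would then be roughly:
#   from ultralytics import YOLO
#   YOLO("yolov8n.pt").train(**TRAIN_ARGS)  # model size "n" is an assumption
```

Fixing the shuffle seed keeps the split reproducible, so metrics from different training runs are computed on the same validation and test images.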

Experimental Results

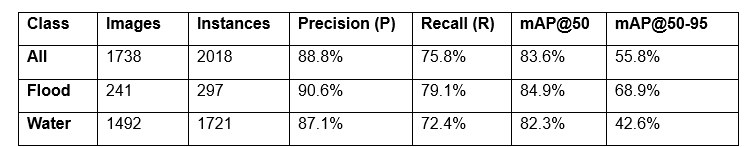

The best-performing model was trained with background images, 100 epochs, and augmentation disabled. Its key evaluation metrics are summarized in Table 1 and Table 2.

Table 1: General results of AI Training

Table 2: Evaluation Metrics Summary

Flood vs. Water Detection

Analyzing the model’s performance in distinguishing between flood and water accumulation:

Flood Precision (90.6%): When the model predicts a flood, it is correct about 90.6% of the time, so flood scenarios are detected reliably with few false alarms.

Water Recall (72.4%): The lower recall means that about 27.6% of the annotated water regions were not detected, likely because of environmental factors such as light reflections or background textures. This is an area for improvement.

Image 1: Examples of the YOLOv8 model detecting floods and water

Conclusion

In this article, we analyzed the importance of various object detection metrics in flood and water detection. Precision, recall, F1-score, and mAP are all crucial for evaluating model performance, and each serves different project needs. For flood detection, recall and mAP@50-95 are vital because undetected floods have severe consequences, while precision is essential for minimizing false alarms in applications such as security and healthcare.

Our practical implementation using YOLOv8 demonstrated the importance of distinguishing between general water accumulation and actual flooding. The model performed well in detecting floods with high precision and recall, though there is room for improvement in handling complex scenarios.

Selecting the right evaluation metrics ensures that object detection models meet the specific needs and challenges of each application.

References

1. Roboflow Universe: https://universe.roboflow.com

2. YOLOv8 Official Documentation: https://ultralytics.com/yolov8

Authors:

Osman Torunoglu, RDI Specialist, DigiCenter, Savonia-ammattikorkeakoulu Oy, osman.torunoglu@savonia.fi

Shahbaz Baig, RDI Specialist, DigiCenter, Savonia-ammattikorkeakoulu, shahbaz.baig@savonia.fi

Rosa Sartjärvi, RDI Specialist, Water Safety, Savonia-ammattikorkeakoulu, rosa.sartajarvi@savonia.fi

Petri Juntunen, RDI Specialist, Water Safety, Savonia-ammattikorkeakoulu, petri.juntunen@savonia.fi

Paola Rosales Suazo De Kontro, RDI Specialist, Hyvinvointi, Savonia-ammattikorkeakoulu, paola.kontro@savonia.fi

Aki Happonen, Digital Development Manager, DigiCenter, Savonia-ammattikorkeakoulu, aki.happonen@savonia.fi