Savonia Article: Potential of quadruped robots – manipulation of a robot body and arm

This work is licensed under CC BY-SA 4.0

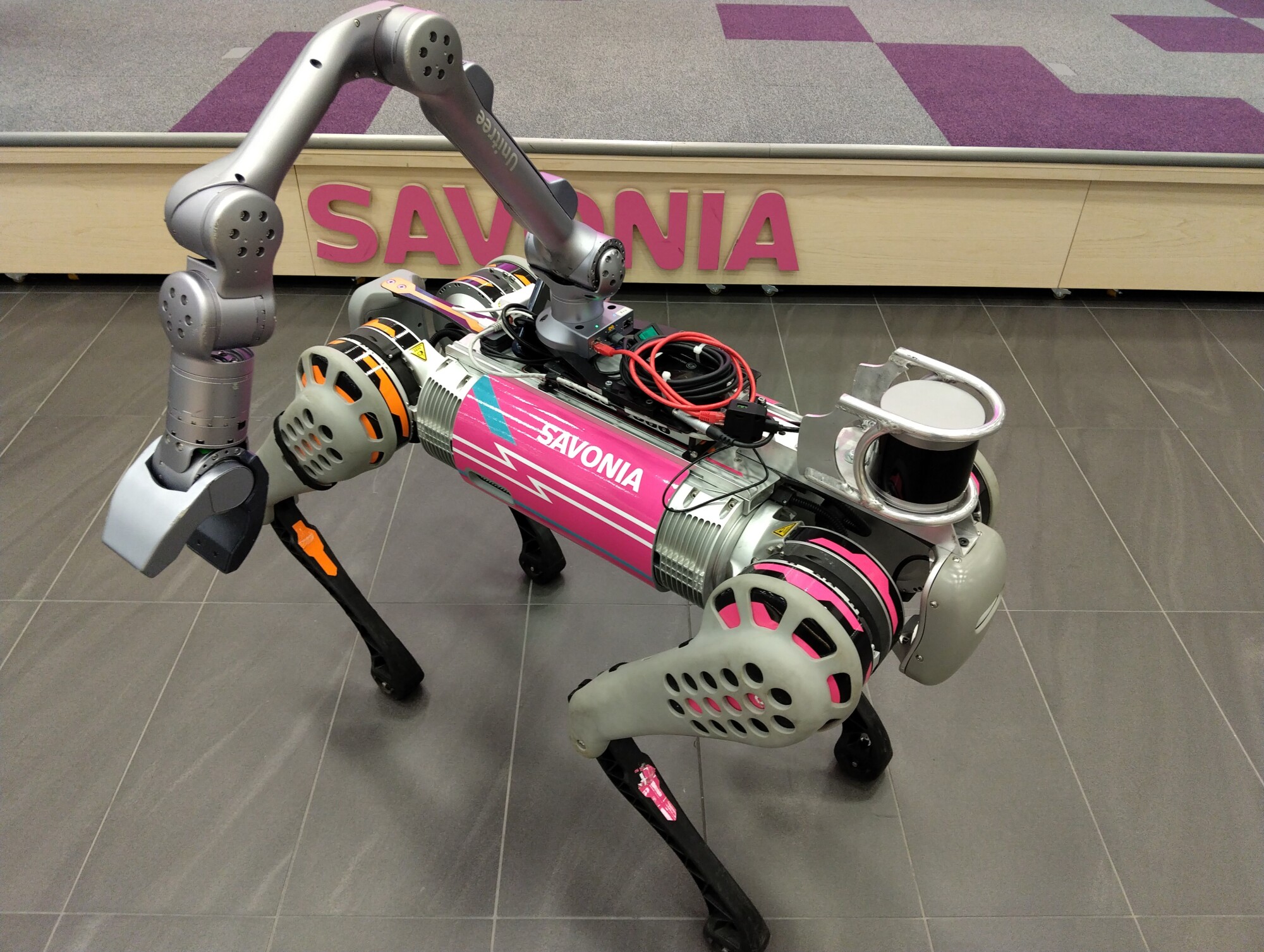

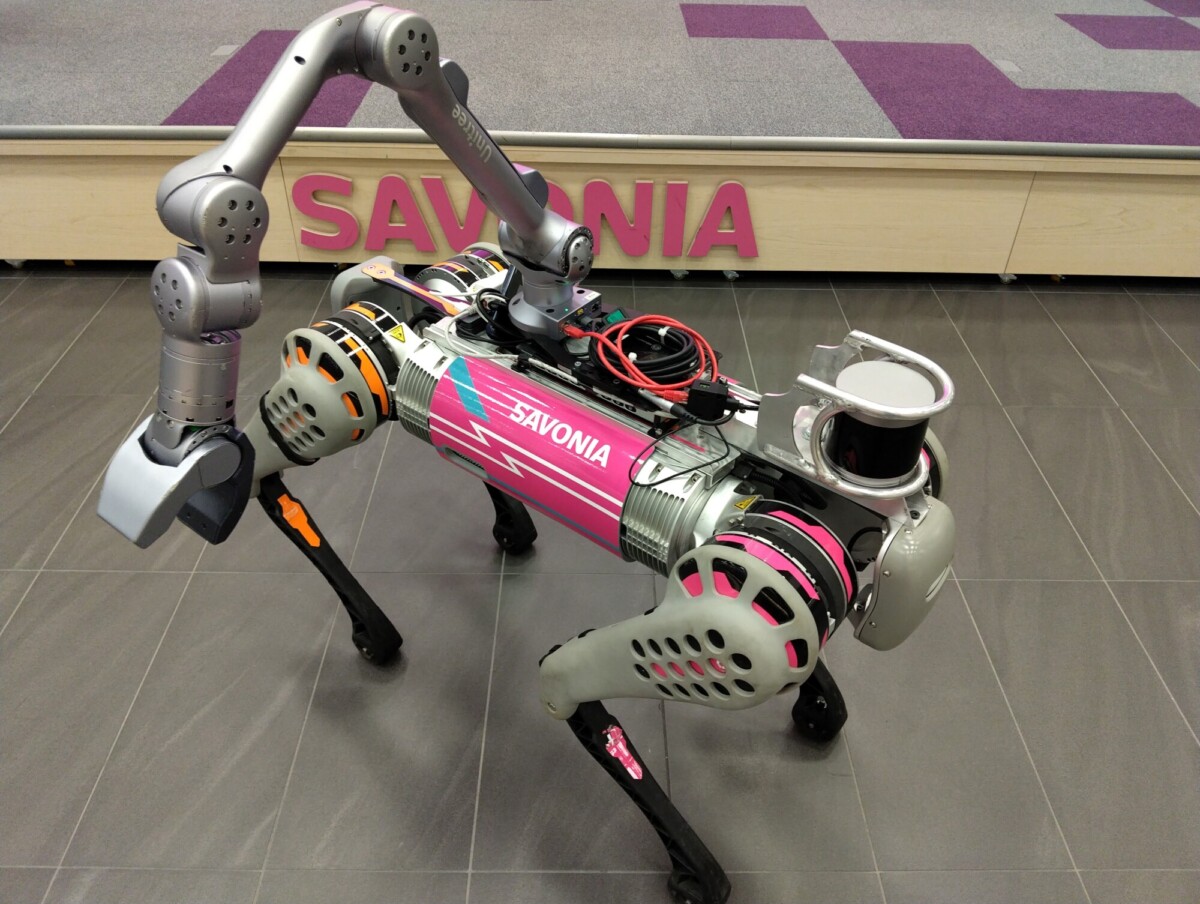

In recent years, quadruped robots, often referred to as “robot dogs,” have gained significant attention for their ability to perform tasks in environments that are challenging for traditional wheeled robots. These four-legged robots can be used for a variety of applications, from search and rescue missions in disaster zones to routine inspections in industrial settings. As part of the TutkA project, a Unitree B1 robot arrived at DigiCenter this summer and was given the name Bob. The addition of the Unitree Z1 robotic arm further enhances Bob’s capabilities, enabling it to interact with its surroundings in more complex ways, such as lifting objects or collecting material. This article describes the work done to control both the robot’s body and its arm in order to achieve seamless movement and manipulation.

Programming the movements

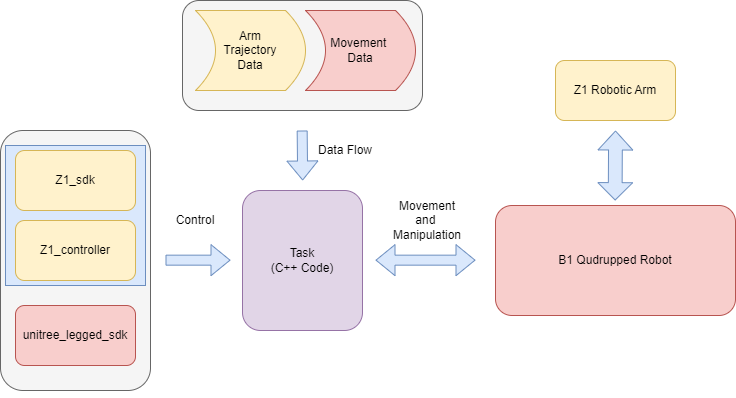

The first goal with Bob was to achieve precise movement between two points. This was accomplished by programming in C++ with the unitree_legged_sdk (software development kit). Early on, it became clear that Bob supports two levels of control: low-level and high-level. Low-level control required suspending Bob off the ground so that each limb could be moved individually by running executables. Once the team had mastered coordinating the three joints in each leg, work advanced to high-level control, in which Bob starts from a standard standing position. By switching between different modes in the executables, Bob was able to walk, kneel, rise, climb stairs and turn.
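To make the idea of moving between two points more concrete, here is a minimal C++ sketch that plans a “turn in place, then walk straight” sequence of velocity commands. It does not use the unitree_legged_sdk: the `Pose2D` and `VelocityStep` types and the `planTurnThenWalk` function are hypothetical, and the sketch assumes that high-level control accepts a forward speed and a yaw rate held for a given duration, ignoring acceleration and drift.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical command type for illustration only; this is NOT the
// unitree_legged_sdk command structure.
struct VelocityStep {
    double forwardSpeed;  // m/s, positive = forward
    double yawRate;       // rad/s, positive = counter-clockwise
    double duration;      // s, how long to hold this command
};

// Hypothetical planar pose of the robot body.
struct Pose2D {
    double x;    // m
    double y;    // m
    double yaw;  // rad
};

constexpr double kPi = 3.14159265358979323846;

// Wrap an angle into [-pi, pi) so the robot always turns the short way round.
double wrapAngle(double a) {
    a = std::fmod(a + kPi, 2.0 * kPi);
    if (a < 0.0) a += 2.0 * kPi;
    return a - kPi;
}

// Plan an open-loop "turn in place, then walk straight" sequence from
// `start` to the goal point (goalX, goalY).
std::vector<VelocityStep> planTurnThenWalk(const Pose2D& start, double goalX, double goalY,
                                           double walkSpeed, double turnRate) {
    assert(walkSpeed > 0.0 && turnRate > 0.0);
    std::vector<VelocityStep> steps;

    const double dx = goalX - start.x;
    const double dy = goalY - start.y;
    const double distance = std::hypot(dx, dy);
    if (distance < 1e-6) return steps;  // already at the goal

    // Rotate until the body faces the goal.
    const double headingError = wrapAngle(std::atan2(dy, dx) - start.yaw);
    if (std::fabs(headingError) > 1e-6) {
        const double rate = headingError > 0.0 ? turnRate : -turnRate;
        steps.push_back({0.0, rate, std::fabs(headingError) / turnRate});
    }

    // Walk straight for the remaining distance.
    steps.push_back({walkSpeed, 0.0, distance / walkSpeed});
    return steps;
}
```

For example, `planTurnThenWalk({0.0, 0.0, 0.0}, 0.0, -1.0, 0.5, 0.5)` produces a clockwise turn lasting about 3.14 s followed by 2 s of walking. Because timing errors accumulate in an open-loop plan like this, a real setup would likely correct the commands using the robot’s odometry.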

Utilizing the robotic arm

The SDKs provided the essential tools to control Bob’s 12 leg joints and its robotic arm with 7 joints, each powered by a motor. Working with the Z1 arm was particularly challenging because its joints form a chain: moving one joint shifts everything beyond it, so predicting the resulting position of the arm required a strong spatial understanding. The team concluded that the simplest way to use the Z1 arm was the “teach” function offered by z1_sdk, which records a trajectory while the arm is moved by hand. The data for each point of the trajectory was saved to a CSV file, and these coordinates were then used to move the arm from one position to another. One recorded movement, for example, demonstrated how to pick up an item from the floor. To execute the trajectories, the z1_controller executable had to run alongside them, since it serves as the control program that z1_sdk communicates with.
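The record-and-replay approach can be illustrated with a short C++ sketch that reads recorded waypoints from a CSV file and linearly interpolates between them so that every joint moves smoothly. The file layout (one waypoint per line, comma-separated joint angles in radians) is an assumption, not the documented format written by the z1_sdk “teach” function, and `parseTrajectoryCsv` and `densify` are hypothetical helpers. In a real setup the resulting commands would presumably be sent to the arm through z1_sdk while z1_controller is running.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// One recorded arm configuration: joint angles in radians (assumed layout).
using Waypoint = std::vector<double>;

// Hypothetical parser: each non-empty line holds comma-separated joint angles.
std::vector<Waypoint> parseTrajectoryCsv(std::istream& in) {
    std::vector<Waypoint> points;
    std::string line;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();  // tolerate CRLF files
        if (line.empty()) continue;
        Waypoint wp;
        std::stringstream row(line);
        std::string cell;
        while (std::getline(row, cell, ',')) wp.push_back(std::stod(cell));
        if (!points.empty() && wp.size() != points.front().size())
            throw std::runtime_error("inconsistent number of joints in CSV row");
        points.push_back(wp);
    }
    return points;
}

// Insert `substeps` linearly interpolated configurations per segment, so each
// joint moves gradually from one recorded waypoint to the next.
std::vector<Waypoint> densify(const std::vector<Waypoint>& points, std::size_t substeps) {
    assert(substeps > 0);
    std::vector<Waypoint> out;
    if (points.empty()) return out;
    for (std::size_t i = 0; i + 1 < points.size(); ++i) {
        for (std::size_t k = 0; k < substeps; ++k) {
            const double t = static_cast<double>(k) / static_cast<double>(substeps);
            Waypoint q(points[i].size());
            for (std::size_t j = 0; j < q.size(); ++j)
                q[j] = points[i][j] + t * (points[i + 1][j] - points[i][j]);
            out.push_back(q);
        }
    }
    out.push_back(points.back());  // finish exactly on the last recorded point
    return out;
}
```

Interpolating in joint space keeps each motor’s motion simple and avoids reasoning about how the joints affect each other; the trade-off is that the gripper does not follow a straight line between waypoints.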

Conclusions

The final stage was to integrate the arm and body movements using a bash script. Bob demonstrated the combined movements successfully in TutkA presentations, which sparked many ideas for future development. One idea from the audience was to mount a thermal camera on Bob to monitor livestock or crops. The process of getting to know Bob highlighted the complexity of quadruped robots and emphasized the importance of strong teamwork and precise documentation.

Video: https://www.linkedin.com/feed/update/urn:li:activity:7219229596834033665/

This work is funded by Opetus- ja kulttuuriministeriö/Undervisnings- och kulturministeriet/Ministry of Education and Culture and done as part of the TutkA-project (TutkimusAineiston ja tutkimuksen digitalisointi – yhtenäinen tutkimusaineiston keruu- ja käsittely-ympäristö, OKM/16/523/2023).

Contributors:

Iiris Hare, Project Worker, iiris.hare@savonia.fi

Bishwatma Khanal, Project Worker, bishwatma.khanal@savonia.fi

Osman Torunoglu, R&D Specialist, osman.torunoglu@savonia.fi

Premton Canamusa, R&D Specialist, premton.canamusa@savonia.fi

Mika Leskinen, R&D Specialist, mika.leskinen@savonia.fi

Timo Lassila, R&D Specialist, timo.lassila@savonia.fi

Aki Happonen, Project Manager, aki.happonen@savonia.fi