Savonia Article: Enhancing quadruped robot navigation with ROS and the Navigation Stack

This work is licensed under CC BY-SA 4.0

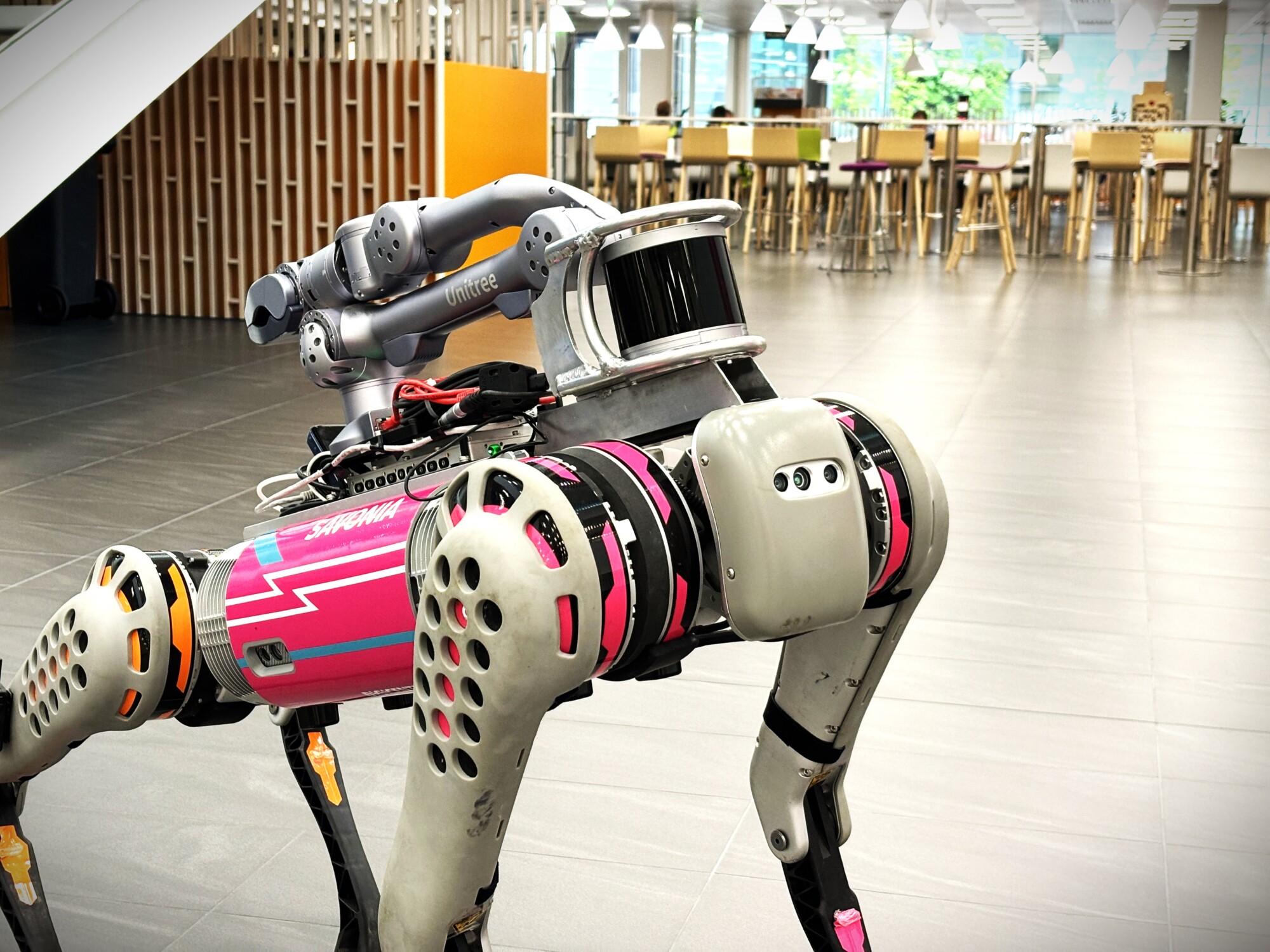

In the field of autonomous systems, navigation is a critical component, whether it is guiding self-driving cars through city streets or enabling robots to explore complex environments. For quadruped robots, precise navigation is essential for safe travel across different settings. Advanced sensors such as the RoboSense Helios 32, acquired as part of the TutkA project, play a key role in obstacle detection. This article explores how configuring a lidar sensor, combined with the Robot Operating System (ROS), enables a quadruped robot to map its surroundings, localize itself and navigate with precision.

Configuring the lidar sensor

The Unitree B1, known as Bob at Savonia, can move over difficult terrain thanks to its IMU (inertial measurement unit) and well-designed body. To improve Bob's ability to move autonomously between two points, a RoboSense LiDAR was mounted above the robot's head and configured for this task. An important step was configuring the LiDAR's detection range: the minimum distance was set to 0.6 meters so that the robot would not detect its own body as an obstacle. The first attempt was to narrow the detection area instead, but this caused problems during mapping: the map started shifting, so the robot lost its spatial awareness and produced an inaccurate map.
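The article does not say whether the 0.6 m limit was set in the lidar driver or in a separate filter node. As a minimal sketch of the idea only, the plain-Python function below drops every return closer than 0.6 m to the sensor origin. The point format and the function name `filter_min_range` are hypothetical; in a real ROS setup the same test would be applied to the points of incoming `sensor_msgs/PointCloud2` messages.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, lidar frame

MIN_RANGE_M = 0.6  # minimum detection distance used on Bob


def filter_min_range(points: Iterable[Point],
                     min_range: float = MIN_RANGE_M) -> List[Point]:
    """Keep only returns at least min_range metres from the sensor origin.

    Returns closer than this are assumed to hit the robot's own body and
    would otherwise appear as a permanent obstacle around it.
    """
    return [p for p in points if math.dist(p, (0.0, 0.0, 0.0)) >= min_range]


# Hypothetical sample returns
cloud = [
    (0.3, 0.1, -0.2),  # about 0.37 m: likely the robot's own body
    (0.0, 0.5, 0.0),   # 0.50 m: still inside the blind zone
    (2.0, 0.0, 0.1),   # about 2.00 m: real obstacle
    (0.7, 0.0, 0.0),   # 0.70 m: just outside the limit
]
kept = filter_min_range(cloud)
```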

Communication through ROS

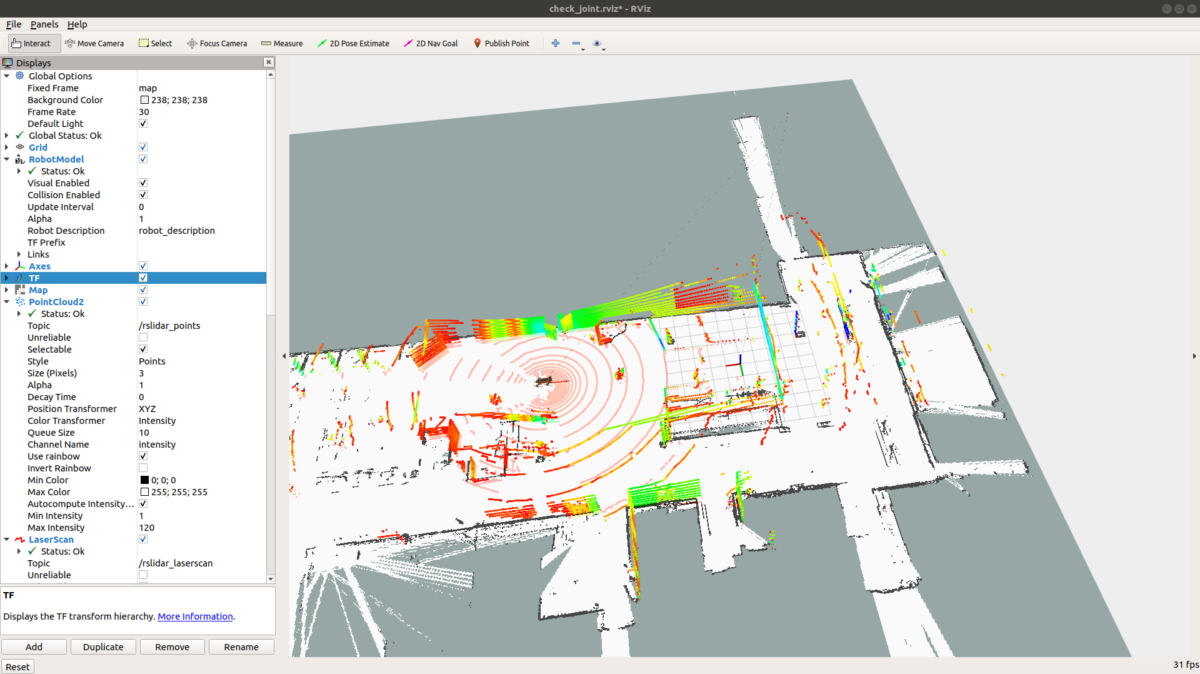

ROS played an essential part in controlling the robot and served as the backbone of its navigation system. It offers powerful tools such as RVIZ, which visualizes the LiDAR's point cloud data in real time. ROS not only handles communication between the different components but also streamlines the integration of complex algorithms.
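To illustrate the communication model, here is a toy, in-process analogy of ROS topics in plain Python. It is not the rospy API: the `TopicBus` class is hypothetical, and the topic name `/rslidar_points` is an assumption (a common default of the RoboSense ROS driver). It shows the property the paragraph refers to: a publisher such as the lidar driver does not need to know which nodes, for example RVIZ or a SLAM node, consume its data.

```python
from typing import Any, Callable, Dict, List

Callback = Callable[[Any], None]


class TopicBus:
    """Toy in-process analogy of ROS topics; not the rospy API."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callback]] = {}

    def subscribe(self, topic: str, callback: Callback) -> None:
        # Any number of nodes may listen to the same topic
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic: str, message: Any) -> int:
        # The publisher does not know who, if anyone, is listening
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)  # number of subscribers reached


bus = TopicBus()
rviz_log: List[Any] = []
slam_log: List[Any] = []
bus.subscribe("/rslidar_points", rviz_log.append)  # visualization node
bus.subscribe("/rslidar_points", slam_log.append)  # mapping node
delivered = bus.publish("/rslidar_points", [(2.0, 0.0, 0.1)])  # lidar driver
```

In ROS 1, which the use of gmapping, Hector SLAM and amcl suggests was the version used here, the ROS master handles topic lookup and messages are serialized and passed between separate processes; the toy keeps only the decoupling idea.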

Mapping the Campus Heart

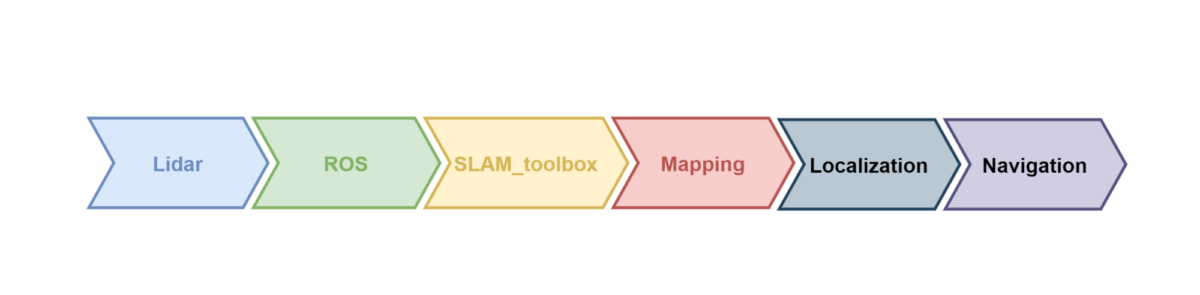

An area can be mapped with various algorithms. After experimenting with Hector SLAM (simultaneous localization and mapping) and gmapping, the team selected SLAM Toolbox as the best-suited method for Bob. The mapping setup involved several components: separate launch files for the point cloud data, the laser scan data, the robot model and SLAM Toolbox, along with a publisher to connect the nodes. This setup made it possible to create an accurate map of the Campus Heart.
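SLAM Toolbox builds its map from 2D laser scans (`sensor_msgs/LaserScan`), while the Helios 32 produces a 3D point cloud, so we assume the laser scan step flattens the cloud into a scan, for example with the `pointcloud_to_laserscan` package. The sketch below shows that conversion in plain Python: points within a height band are binned by bearing, and the nearest return per bin is kept. The parameter names mirror those of `pointcloud_to_laserscan`, but the function `cloud_to_scan` and all default values are illustrative assumptions, not Bob's configuration.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, sensor frame


def cloud_to_scan(points: Iterable[Point],
                  angle_min: float = -math.pi,
                  angle_max: float = math.pi,
                  angle_increment: float = math.radians(1.0),
                  min_height: float = -0.2,
                  max_height: float = 0.5,
                  range_min: float = 0.6,
                  range_max: float = 30.0) -> List[float]:
    """Flatten a 3-D point cloud into a planar scan (one range per bearing bin)."""
    n_bins = int(round((angle_max - angle_min) / angle_increment))
    ranges = [math.inf] * n_bins  # inf means no return in this bin
    for x, y, z in points:
        if not (min_height <= z <= max_height):
            continue  # ignore floor and overhead returns
        r = math.hypot(x, y)  # horizontal distance only
        if not (range_min <= r <= range_max):
            continue
        angle = math.atan2(y, x)
        if not (angle_min <= angle < angle_max):
            continue
        idx = min(int((angle - angle_min) / angle_increment), n_bins - 1)
        ranges[idx] = min(ranges[idx], r)  # keep the nearest obstacle per bin
    return ranges


# Hypothetical sample cloud
cloud = [
    (2.0, 0.0087, 0.0),  # obstacle almost straight ahead
    (3.0, 0.013, 0.1),   # farther point on the same bearing (hidden)
    (1.0, 0.0, -1.0),    # floor return, outside the height band
    (0.3, 0.1, 0.0),     # robot's own body, inside range_min
]
scan = cloud_to_scan(cloud)
```

Keeping only the nearest return per bearing makes the result behave like a single planar laser: a wall behind a chair is hidden by the chair, just as it would be for a 2D scanner.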

Localization, navigation and obstacle avoidance

The next task was to localize the robot on the map and set navigation goals from the RVIZ environment. After starting AMCL (Adaptive Monte Carlo Localization) from a launch file with the newly created map and giving an estimate of Bob's initial position, the point cloud data aligned with the room's edges. This confirmed that Bob was aware of its surroundings and ready to receive navigation goals. Bob was then able to move autonomously to a given point on the map, avoiding obstacles encountered along the way.
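AMCL estimates the robot's pose with a particle filter. The sketch below is plain, fixed-size Monte Carlo localization in one dimension, not the adaptive version: AMCL additionally varies the number of particles and tracks a full 2D pose against the map. Everything in it is hypothetical: a 1-D corridor with a wall at 10 m, a range sensor that measures the distance to that wall, and the noise values. Seeding the particles around a guess plays the role of the initial position estimate given in RVIZ; each step then applies a motion update, weights the particles by the sensor reading and resamples them.

```python
import math
import random
from typing import List

WALL_X = 10.0  # hypothetical map: a single wall at x = 10 m in a 1-D corridor


def likelihood(measured: float, expected: float, sigma: float) -> float:
    """Unnormalized Gaussian sensor model."""
    return math.exp(-0.5 * ((measured - expected) / sigma) ** 2)


def mcl_step(particles: List[float], control: float, measurement: float,
             rng: random.Random, motion_sigma: float = 0.05,
             sensor_sigma: float = 0.2) -> List[float]:
    """One predict-weight-resample cycle of Monte Carlo localization."""
    # 1. Motion update: shift each particle by the odometry reading plus noise
    moved = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]

    # 2. Measurement update: weight each particle by how well it explains
    #    the measured distance to the wall
    weights = [likelihood(measurement, WALL_X - p, sensor_sigma) for p in moved]
    total = sum(weights)
    if total == 0.0:
        # Degenerate case: no particle explains the reading; keep all equally
        weights = [1.0] * len(moved)
        total = float(len(moved))
    weights = [w / total for w in weights]

    # 3. Low-variance (systematic) resampling
    n = len(moved)
    step = 1.0 / n
    u = rng.uniform(0.0, step)
    cumulative = weights[0]
    i = 0
    resampled = []
    for _ in range(n):
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(moved[i])
        u += step
    return resampled


# Usage: initial guess 2.3 m, true start 2.0 m, robot drives 0.5 m per step
rng = random.Random(42)
particles = [rng.gauss(2.3, 0.5) for _ in range(500)]
true_x = 2.0
for _ in range(5):
    true_x += 0.5
    z = (WALL_X - true_x) + rng.gauss(0.0, 0.1)  # simulated noisy range reading
    particles = mcl_step(particles, 0.5, z, rng)

estimate = sum(particles) / len(particles)
print(f"true x = {true_x:.2f} m, estimate = {estimate:.2f} m")
```

Resampling concentrates the particles where the measurements agree with the map, which is loosely analogous to the point cloud snapping onto the room's edges once AMCL had converged.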

Conclusions

After three months of working with Bob, the team achieved autonomous movement, first by programming individual movements and then with the help of the lidar sensor. One finding was that the more detailed the map, the better Bob navigated around obstacles. However, Bob's obstacle avoidance can still be refined, which promises even better performance in the future.

This work is funded by Opetus- ja kulttuuriministeriö/Undervisnings- och kulturministeriet/Ministry of Education and Culture and done as part of the TutkA-project (TutkimusAineiston ja tutkimuksen digitalisointi – yhtenäinen tutkimusaineiston keruu- ja käsittely-ympäristö, OKM/16/523/2023).

Contributors:

Iiris Hare, Project Worker, iiris.hare@savonia.fi

Bishwatma Khanal, Project Worker, Bishwatma.khanal@savonia.fi

Osman Torunoglu, R&D Specialist, osman.torunoglu@savonia.fi

Premton Canamusa, R&D Specialist, premton.canamusa@savonia.fi

Mika Leskinen, R&D Specialist, mika.leskinen@savonia.fi

Timo Lassila, R&D Specialist, timo.lassila@savonia.fi

Aki Happonen, Project Manager, aki.happonen@savonia.fi